Agentic AI vs. Automation: Why You're (Probably) Not Building Real Agents

You're Not Really Building Agents – And That’s Okay

Executive Summary

Most AI use cases labeled as “agents” today are either automated scripts or LLMs calling functions. True agents are goal-driven, autonomous and adaptive: they decide how to act, not just which tool to call.

Understanding the difference helps you:

- Build smarter architectures

- Invest in the right AI technologies

- Avoid hype-fueled traps

Why This Matters (Especially to CTOs and CEOs)

We're at peak agent hype. Websites, sales pitches and product roadmaps are flooded with the term. But:

In the vast majority of cases, you’re building automation, not agents.

That’s not bad. But if you expect adaptability, learning, and dynamic problem solving from a script, you’ll be disappointed.

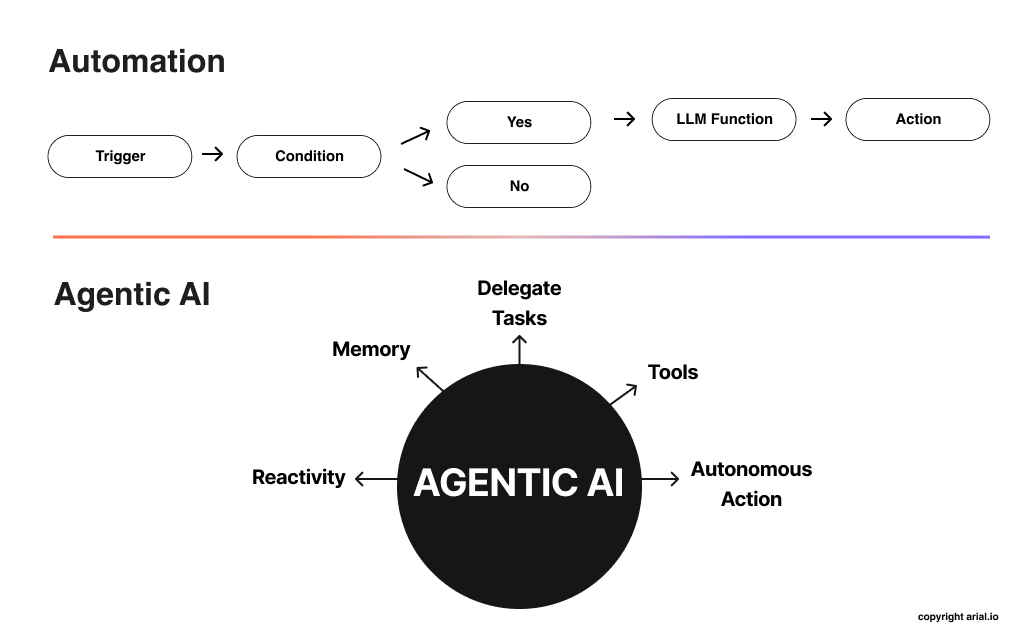

Automation: Simple, Efficient, Not Intelligent

Definition:

Software that executes predefined steps, repeatedly and deterministically.

Traits:

- No autonomy

- No planning or learning

- Focus on efficiency

Example:

Phillip writes a script that reads a CSV file, adds a timestamp to each row, and saves the result.

Is this an agent?

No. It performs a static task with no awareness or goal.
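For concreteness, Phillip's script might look like the minimal Python sketch below. The file paths, the `timestamp` column name, and the choice of a single UTC timestamp per run are assumptions for illustration. Whatever the data contains, every run executes the same fixed steps.

```python
import csv
from datetime import datetime, timezone

def add_timestamps(src: str, dst: str) -> int:
    """Copy src to dst, appending a 'timestamp' column to every row.

    Returns the number of data rows written. One timestamp (processing time,
    UTC) is used for the whole run; this is an illustrative choice.
    """
    stamp = datetime.now(timezone.utc).isoformat()
    with open(src, newline="") as fin, open(dst, "w", newline="") as fout:
        reader = csv.DictReader(fin)
        fieldnames = list(reader.fieldnames or []) + ["timestamp"]
        writer = csv.DictWriter(fout, fieldnames=fieldnames)
        writer.writeheader()
        count = 0
        for row in reader:          # same steps for every row, no decisions
            row["timestamp"] = stamp
            writer.writerow(row)
            count += 1
    return count
```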

Function Calling: Smart Interfaces, Still No Autonomy

Function calling lets LLMs interact with APIs. Smart, yes. But not agentic.

Definition:

The LLM decides when a tool or function should be called and generates the structured input for it; the application then executes the call.

Traits:

- LLM chooses tool usage

- Single-turn or linear process

- No overarching strategy or learning

Example:

Phillip connects a weather API to an LLM. The LLM returns tomorrow’s forecast based on a prompt.

Is this an agent?

No. It responds intelligently, but it doesn’t decide why to act or what to do next.
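A minimal sketch of Phillip's setup, assuming a hypothetical `get_weather` tool and a stubbed `fake_llm` in place of a real model (real providers return tool calls in their own structured formats, which vary). The model picks the tool and its arguments, the application runs the call, and one answer comes back. There is no loop and no goal beyond the single request.

```python
import json

def get_weather(city: str, day: str) -> dict:
    """Hypothetical weather tool; a real version would query a weather API."""
    return {"city": city, "day": day, "forecast": "sunny", "high_c": 24}

TOOL_REGISTRY = {"get_weather": get_weather}

def fake_llm(prompt: str) -> str:
    """Stub for an LLM with function calling. It ignores the prompt and returns a
    hard-coded tool call as JSON; a real model would derive it from the prompt."""
    return json.dumps({"name": "get_weather",
                       "arguments": {"city": "Berlin", "day": "tomorrow"}})

def answer(prompt: str) -> str:
    call = json.loads(fake_llm(prompt))                        # 1. model chooses tool + arguments
    result = TOOL_REGISTRY[call["name"]](**call["arguments"])  # 2. our code runs the tool
    # 3. one response, then done: nothing decides what to do next
    return (f"{result['day'].capitalize()} in {result['city']}: "
            f"{result['forecast']}, high of {result['high_c']}°C")
```

The intelligence sits in choosing `get_weather` and filling its arguments; control flow is still a straight line.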

True Agents: Goal Driven, Adaptive, Autonomous

Now we enter actual agentic behavior.

Agents have a mission. They perceive, plan, act, and adapt over time.

Definition (AI Theory):

An agent:

- Perceives the environment

- Acts based on observations

- Adapts its strategy

- Acts to maximize its chance of achieving a goal

Key Traits:

- Goal oriented: Operates toward an outcome

- Plans and replans: Adjusts strategy dynamically

- Learns from feedback: Adapts based on outcomes

- Has memory: Maintains internal state and context

- Uses tools: strategically, not in a fixed sequence

Non-Agent Example: Research Assistant

Phillip builds a research assistant that:

1. Runs a web search

2. Summarizes results

3. Sends an email

Is this an agent?

No. It is a pipeline, not a reasoning entity. There is no adaptive logic or internal decision making.
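Sketched in Python with hypothetical stub functions (`web_search`, `summarize` and `send_email` are placeholders, not a real library), the assistant is a fixed chain: each step feeds the next, and nothing inspects the results to decide whether to search again, refine the query, or stop.

```python
def web_search(query: str) -> list[str]:
    """Hypothetical search stub returning canned snippets."""
    return [f"Result about {query} #1", f"Result about {query} #2"]

def summarize(snippets: list[str]) -> str:
    """Hypothetical summarizer; a real one might call an LLM."""
    return " | ".join(snippets)

def send_email(to: str, body: str) -> dict:
    """Hypothetical mailer; returns the message instead of sending it."""
    return {"to": to, "body": body}

def research_pipeline(query: str, to: str) -> dict:
    # Fixed order, no branching, no feedback: search -> summarize -> email.
    return send_email(to, summarize(web_search(query)))
```

Swapping the stubs for real search and LLM calls makes each step smarter, but the control flow stays a pipeline.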

Agent Example: API Security Agent

Mission: Identify potential vulnerabilities in a set of internal APIs using a limited set of tools:

- scan_open_ports()

- probe_endpoint_security()

- simulate_attack_vector()

The agent:

- Selects which API or endpoint to test based on prior results

- Maintains a log of already scanned components to avoid duplication

- Switches methods if a scan fails or yields incomplete results

- Adapts strategy based on observed responses (e.g., rate limits, error codes, authentication challenges)

- Ends the process once a defined security confidence threshold is reached or vulnerabilities are found

Yes, this is a true agent.
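The behaviors listed above can be sketched as a small control loop. This is a toy, not a security tool: the three tool names come from the mission, but here they are scripted stand-ins (the hypothetical `SimulatedAPIs` class) that return status strings, and the confidence metric (share of endpoints with one definitive result) is an assumption. What makes it agentic is the loop: memory of completed scans, switching methods on failure, deferring rate-limited endpoints, and stopping on a goal condition.

```python
from collections import deque
from dataclasses import dataclass, field

TOOLS = ("scan_open_ports", "probe_endpoint_security", "simulate_attack_vector")

class SimulatedAPIs:
    """Scripted responses so the sketch runs offline; statuses are hypothetical."""
    def __init__(self, script: dict[tuple[str, str], list[str]]):
        self.script = {key: list(replies) for key, replies in script.items()}

    def call(self, tool: str, endpoint: str) -> str:
        replies = self.script.get((endpoint, tool), ["ok"])
        return replies.pop(0) if len(replies) > 1 else replies[0]  # last reply repeats

@dataclass
class SecurityAgent:
    apis: SimulatedAPIs
    endpoints: list[str]
    confidence_goal: float = 1.0  # assumed metric: share of endpoints with a definitive result
    done: set[tuple[str, str]] = field(default_factory=set)  # memory: completed (endpoint, tool)
    assessed: set[str] = field(default_factory=set)
    findings: list[str] = field(default_factory=list)
    log: list[str] = field(default_factory=list)

    def confidence(self) -> float:
        return len(self.assessed) / max(len(self.endpoints), 1)

    def run(self, max_steps: int = 50) -> list[str]:
        queue = deque(self.endpoints)
        for _ in range(max_steps):
            if not queue or self.findings or self.confidence() >= self.confidence_goal:
                break                               # goal reached or nothing left to test
            endpoint = queue.popleft()
            for tool in TOOLS:
                if (endpoint, tool) in self.done:
                    continue                        # never repeat a completed scan
                status = self.apis.call(tool, endpoint)
                self.log.append(f"{tool}({endpoint}) -> {status}")
                if status == "rate_limited":
                    queue.append(endpoint)          # back off: test other endpoints first
                    break
                self.done.add((endpoint, tool))
                if status == "vulnerable":
                    self.findings.append(endpoint)  # stop condition: vulnerability found
                    break
                if status == "ok":
                    self.assessed.add(endpoint)     # definitive clean result
                    break
                # "failed" or anything else: switch to the next method
        return self.findings
```

For example, if `/orders` is rate-limited once and then fails a port scan before a probe flags it, the agent tests other endpoints in the meantime, switches tools after the failure, and stops as soon as `/orders` is flagged. Treating one clean result as "assessed" is deliberately simplistic.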

Real World Example: Voice AI as a True Agent

Let’s say you're a fashion retailer offering in-store personal styling sessions. You want customers to call and book appointments, handled entirely by AI.

Old-school automation:

"Press 1 for hours. Press 2 to book."

No. This is automation.

Modern voice assistant:

"I'd like to book for Friday."

The LLM extracts the intent and calls the booking API.

No. This is function calling. Smart but not autonomous.

Agentic AI version:

Goal: Book a personalized fitting based on customer constraints and store capacity.

The agent:

- Handles vague input such as "sometime next week"

- Accesses multiple calendars

- Resolves conflicts

- Recommends alternatives

- Adapts to errors or cancellations

- Maintains memory of preferences

Yes, this is a true agent.
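A compressed sketch of this behavior, assuming in-memory stylist calendars and a hypothetical `BookingAgent` class. Simple keyword rules stand in for the LLM's interpretation of vague requests, and the voice layer is omitted. The agent searches every calendar, skips conflicting slots, offers alternatives, remembers each customer's stylist, and re-plans from the same request when a booking falls through.

```python
from dataclasses import dataclass, field
from datetime import date, timedelta

Slot = tuple[str, date, int]  # (stylist, day, hour)

@dataclass
class BookingAgent:
    calendars: dict[str, set[tuple[date, int]]]           # stylist -> booked (day, hour)
    hours: tuple[int, ...] = (10, 13, 16)                  # assumed session start times
    memory: dict[str, str] = field(default_factory=dict)  # customer -> preferred stylist

    def interpret(self, request: str, today: date) -> list[date]:
        """Map a vague request to candidate days (keyword rules stand in for an LLM)."""
        if "next week" in request:
            monday = today + timedelta(days=7 - today.weekday())
            return [monday + timedelta(days=i) for i in range(6)]  # Monday to Saturday
        return [today + timedelta(days=i) for i in range(1, 8)]    # default: the next 7 days

    def free_slots(self, customer: str, days: list[date]) -> list[Slot]:
        """All conflict-free slots across every calendar, remembered stylist first."""
        preferred = self.memory.get(customer)
        stylists = sorted(self.calendars, key=lambda s: s != preferred)  # False sorts first
        return [(s, d, h) for d in days for h in self.hours for s in stylists
                if (d, h) not in self.calendars[s]]

    def book(self, customer: str, request: str, today: date) -> dict:
        slots = self.free_slots(customer, self.interpret(request, today))
        if not slots:
            return {"status": "no_slot", "alternatives": []}
        stylist, day, hour = slots[0]
        self.calendars[stylist].add((day, hour))
        self.memory[customer] = stylist                    # remember for next time
        return {"status": "booked", "slot": slots[0], "alternatives": slots[1:3]}

    def handle_cancellation(self, customer: str, request: str, today: date) -> dict:
        """The stylist cancelled. The old slot stays blocked in their calendar, so
        re-planning from the same request lands on the next best free slot."""
        return self.book(customer, request, today)
```

Because the customer's stylist is remembered, a rebooking after a cancellation prefers the same stylist at the next free time rather than starting from scratch.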

Business Impact:

- Higher booking conversion

- Better customer experience

- Lower staff load

- Long-term learning and personalization

This agent is not reading a script. It is solving a moving, human problem in real time.

Why the Confusion?

1. Marketing Hype:

“Agent” sounds cooler than “script.”

2. Tool Confusion:

Function calling is not reasoning. Tools are not agents.

3. Smart is not Autonomous:

Even advanced automation isn’t adaptive.

4. Shared Goal:

Automation, function calling and agents all aim to reduce human effort. But how that goal is reached matters a lot.

What You Should Do

Use the right tech for the right problem.

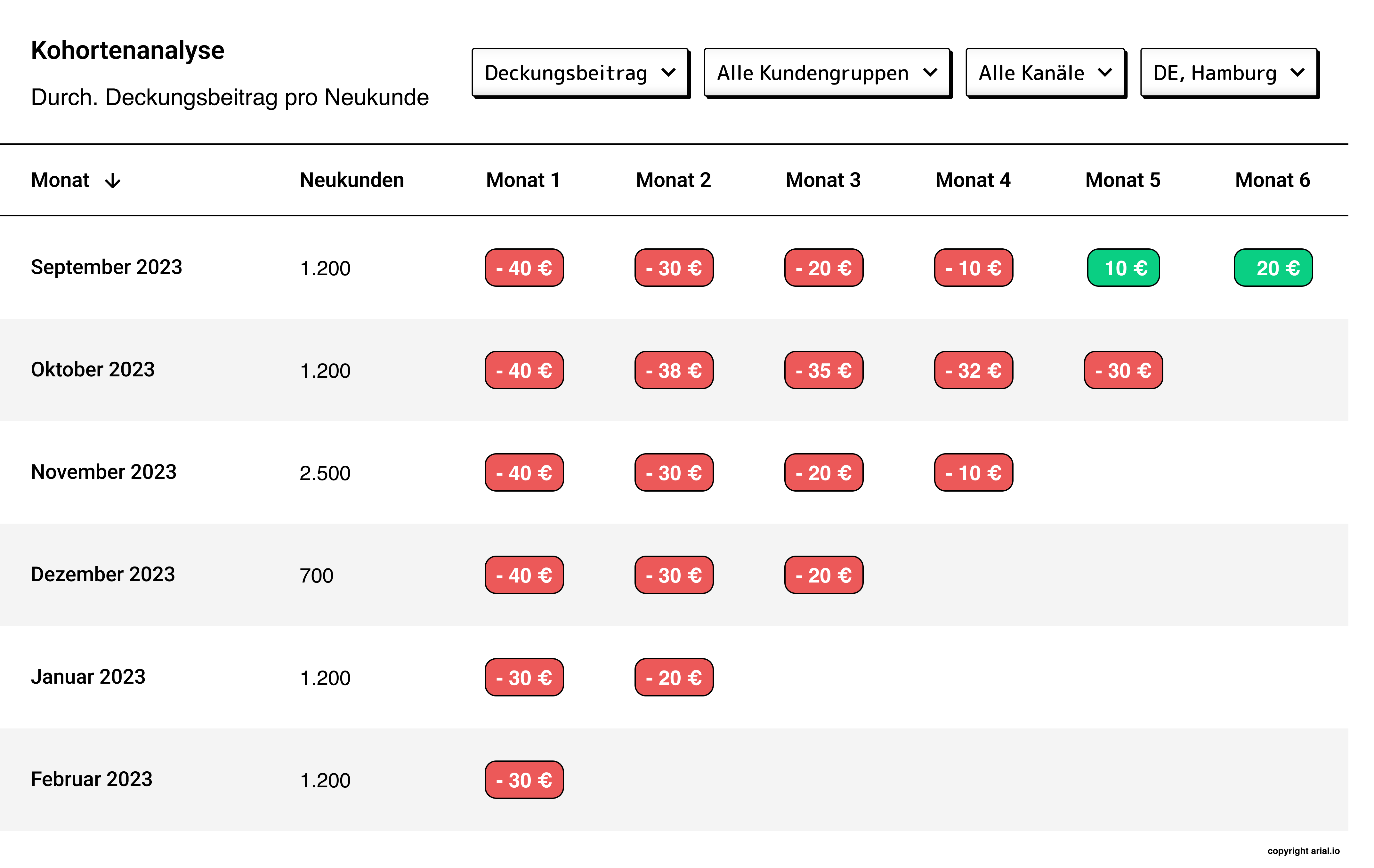

| Use Case | Approach |
|---|---|
| Repetitive task | Automation |
| Extend LLM with tools | Function Calling |
| Adaptive goal pursuit | Agentic AI |

Ask yourself:

If I gave this system a goal and walked away, could it figure it out?

If the answer is no, it is not an agent.

CEO and CTO Level Cheat Sheet

| Concept | What It Does | Is It an Agent? |
|---|---|---|
| Automation | Executes predefined steps | No |
| Function Calling | Calls tools based on prompts | No |
| Agent | Autonomously plans, adapts, and acts | Yes |

Conclusion: Stop Faking Agents. Start Thinking Autonomy

We are entering the age of AI systems that don’t just act but decide.

Know what you are building. Call it what it is. And when the time is right:

Don’t automate. Don’t orchestrate. Deploy an agent.